Above: the Standard Model particles in the existing SU(2)xU(1) electroweak symmetry group (a high-quality PDF version of this table can be found here). The complexity of chiral symmetry - the fact that only particles with left-handed spins (Weyl spinors) experience the weak force - is shown by the different effective weak charges for left- and right-handed particles of the same type. My argument, with evidence to back it up in this post and previous posts, is that there are no real 'singlets': all the particles are doublets apart from the gauge bosons (W/Z particles), which are triplets. This causes a major change to the SU(2)xU(1) electroweak symmetry. Essentially, the U(1) group, which is a source of singlets (i.e., particles shown in blue type in this table which may have weak hypercharge but have no weak isotopic charge), is removed! An SU(2) symmetry group then becomes a source of electric charge and weak hypercharge, as well as keeping its existing role in the Standard Model as a descriptor of isotopic spin. It modifies the role of the 'Higgs bosons': some such particles are still required to give mass, but the mainstream electroweak symmetry breaking mechanism is incorrect.

There are 6 rather than 4 electroweak gauge bosons, the same 3 massive weak bosons as before, but 2 new charged massless gauge bosons in addition to the uncharged massless 'photon', B. The 3 massless gauge bosons are all massless counterparts to the 3 massive weak gauge bosons. The 'photon' is not the gauge boson of electromagnetism because, being neutral, it can't represent a charged field. Instead, the 'photon' gauge boson is the graviton, while the two massless gauge bosons are the charged exchange radiation (gauge bosons) of electromagnetism. This allows quantitative predictions and the resolution of existing electromagnetic anomalies (which are usually just censored out of discussions).

It is the U(1) group which falsely introduces singlets. All Standard Model fermions are really doublets: if they are bound by the weak force (i.e., left-handed Weyl spinors) then they are doublets in close proximity. If they are right-handed Weyl spinors, they are doublets mediated by only strong, electromagnetic and gravitational forces, so for leptons (which don't feel the strong force), the individual particles in a doublet can be located relatively far from one another (the electromagnetic and gravitational interactions are both long-range forces). The beauty of this change to the understanding of the Standard Model is that gravitation automatically pops out in the form of massless neutral gauge bosons, while electromagnetism is mediated by two massless charged gauge bosons, which gives a causal mechanism that predicts the quantitative coupling constants for gravity and electromagnetism correctly. Various other vital predictions are also made by this correction to the Standard Model.

Above: the fundamental vector boson charges of SU(2). For any particle which has effective mass, there is a black hole event horizon radius of 2GM/c². If there is a strong enough electric field at this radius for pair production to occur (in excess of Schwinger's threshold of 1.3 × 10¹⁸ V/m), then pairs of virtual charges are produced near the event horizon. If the particle is positively charged, the negatively charged particles produced at the event horizon will fall into the black hole core, while the positive ones will escape as charged radiation (see Figures 2, 3 and particularly 4 below for the mechanism for propagation of massless charged vector boson exchange radiation between charges scattered around the universe). If the particle is negatively charged, it will similarly be a source of negatively charged exchange radiation (see Figure 2 for an explanation of why the charge is never depleted by absorbing radiation from nearby pair production of opposite sign to itself; there is simply an equilibrium of exchange of radiation between similar charges which cancels out that effect). In the case of a normal (large) black hole or neutral dipole charge (one with equal and opposite charges, and therefore neutral as a whole), as many positive as negative pair production charges can escape from the event horizon and these will annihilate one another to produce neutral radiation, which produces the right force of gravity. Figure 4 proves that this gravity force is about 10⁴⁰ times weaker than electromagnetism. Another earlier post calculates the Hawking black hole radiation rate and proves it creates the force strength involved in electromagnetism.
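As a rough numerical check of the two figures quoted in this caption, here is a minimal Python sketch. It evaluates the horizon radius 2GM/c² for the electron mass and the Schwinger threshold field, taken here in its standard form m²c³/(e·h-bar). The constants are rounded reference values, the function names are my own, and the last function applies the unshielded classical Coulomb field at that radius, which is an assumption of the sketch rather than something established in this post.

```python
import math

# Rounded SI reference values; illustrative inputs only.
G = 6.674e-11           # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8             # speed of light, m/s
HBAR = 1.0546e-34       # reduced Planck constant, J s
E_CHARGE = 1.602e-19    # elementary charge, C
EPS0 = 8.854e-12        # vacuum permittivity, F/m
M_ELECTRON = 9.109e-31  # electron mass, kg


def horizon_radius(mass_kg):
    """Event horizon radius r = 2GM/c^2 for the given mass."""
    return 2.0 * G * mass_kg / C**2


def schwinger_field(mass_kg=M_ELECTRON):
    """Schwinger threshold field E_c = m^2 c^3 / (e * hbar), in V/m."""
    return mass_kg**2 * C**3 / (E_CHARGE * HBAR)


def coulomb_field(radius_m):
    """Classical field of one unit charge at the given radius (assumption:
    no vacuum shielding and no quantum corrections are applied)."""
    return E_CHARGE / (4.0 * math.pi * EPS0 * radius_m**2)


r_e = horizon_radius(M_ELECTRON)
print("horizon radius for electron mass (m):", r_e)
print("Schwinger threshold field (V/m):", schwinger_field())
print("classical field at that radius exceeds threshold:",
      coulomb_field(r_e) > schwinger_field())
```

With these inputs the threshold comes out at roughly 1.3 × 10¹⁸ V/m, matching the figure quoted above, and the horizon radius for an electron mass is of order 10⁻⁵⁷ m. The comparison in the last line only shows that the classical inverse-square field, extrapolated to that radius, is far above the threshold.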

(For a background to the elementary basics of quantum field theory and quantum mechanics, like the Schroedinger and Dirac equations and their consequences, see the earlier post on The Physics of Quantum Field Theory. For an introduction to symmetry principles, see the previous post.)

The SU(2) symmetry can model electromagnetism (in addition to isospin) because it models two types of charges, hence giving negative and positive charges without the wrong method U(1) uses (where it specifies there are only negative charges, so positive ones have to be represented by negative charges going backwards in time). In addition, SU(2) gives 3 massless gauge bosons, two charged ones (which mediate the charge in electric fields) and one neutral one (which is the spin-1 graviton, which causes gravity by pushing masses together). In addition, SU(2) describes doublets, matter-antimatter pairs. We know that electrons are not produced individually, only in lepton-antilepton pairs. The reason why electrons can be separated a long distance from their antiparticle (unlike quarks) is simply the nature of the binding force, which is long range electromagnetism instead of a short-range force.

Quantum field theory, i.e., the standard model of particle physics, is based mainly on experimental facts, not speculation. The symmetries of baryons give SU(3) symmetry, those of mesons give SU(2) symmetry. That's experimental particle physics. The problem in the standard model SU(3)xSU(2)xU(1) is the last component, the U(1) electromagnetic symmetry. In SU(3) you have three charges (coded red, blue and green) and form triplets of quarks (baryons) bound by 3² - 1 = 8 charged gauge bosons mediating the strong force. For SU(2) you have two charges (two isospin states) and form doublets, i.e., quark-antiquark pairs (mesons) bound by 2² - 1 = 3 gauge bosons (one positively charged, one negatively charged and one neutral).
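The boson counts used above follow from the standard rule that SU(N) has N² - 1 independent generators, while U(N) has N². A minimal Python sketch of that counting (the function names are my own):

```python
def su_generators(n):
    """Number of independent generators (gauge bosons) of SU(n): n^2 - 1."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    return n * n - 1


def u_generators(n):
    """Number of independent generators of U(n): n^2."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    return n * n


for name, count in [("U(1)", u_generators(1)),
                    ("SU(2)", su_generators(2)),
                    ("SU(3)", su_generators(3))]:
    print(name, "generators:", count)
```

This gives 1 generator for U(1), 3 for SU(2) and 8 for SU(3), which is where the single photon, the 3 weak bosons and the 8 gluons of the Standard Model come from.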

One problem comes when electromagnetism is represented by U(1) and added to SU(2) to form the electroweak unification, SU(2)xU(1). This means that you have to add a Higgs field which breaks the SU(2)xU(1) symmetry at low energy, by giving masses (at low energy only) to the 3 gauge bosons of SU(2). At high energy, the masses of those 3 gauge bosons must disappear, so that they are massless, like the photon assumed to mediate the electromagnetic force represented by U(1). The required Higgs field adds mass in the right way for electroweak symmetry breaking to work in the Standard Model, but it adds complexity and isn't very predictive.

The other, related, problem is that SU(2) only acts on left-handed particles, i.e., particles whose spin is described by a left-handed Weyl spinor. U(1) only has one electric charge, the electron. Feynman represents positrons in the scheme as electrons going backwards in time, and this makes U(1) work, but it has many problems and a massless version of SU(2) is the correct electromagnetism-gravitational model.

So the correct model for electromagnetism is really SU(2) which has two types of electric charge (positive and negative) and acts on all particles regardless of spin, and is mediated by three types of massless gauge bosons: negative ones for the fields around negative charges, positive ones for positive fields, and neutral ones for gravity.

The question then is, what is the corrected Standard Model? If we delete U(1) do we have to replace it with another SU(2) to get SU(3)xSU(2)xSU(2), or do we just get SU(3)xSU(2) in which SU(2) takes on new meaning, i.e., there is no symmetry breaking?

Assume the symmetry group of the universe is SU(3)xSU(2). That would mean that the new SU(2) interpretation has to do all the work and more of SU(2)xU(1) in the existing Standard Model. The U(1) part of SU(2)xU(1) represented both electromagnetism and weak hypercharge, while SU(2) represented weak isospin.

We need to dump the Higgs field as a source for symmetry breaking, and replace it with a simpler mass-giving mechanism that only gives mass to left-handed Weyl spinors. This is because the electroweak symmetry breaking problem has disappeared. We have to use SU(2) to represent isospin, weak hypercharge, electromagnetism and gravity. Can it do all that? Can the Standard Model be corrected by simply removing U(1) to leave SU(3)xSU(2) and having the SU(2) produce 3 massless gauge bosons (for electromagnetism and gravity) and 3 massive gauge bosons (for weak interactions)? Can we, in other words, remove the Higgs mechanism for electroweak symmetry breaking and replace it by a simpler mechanism in which the short range of the three massive weak gauge bosons distinguishes the weak force from electromagnetism (and gravity)? The mass-giving field only gives mass to gauge bosons that normally interact with left-handed particles. What is unnerving is that this compression means that one SU(2) symmetry is generating a lot more physics than in the Standard Model, but in the Standard Model U(1) represented both electric charge and weak hypercharge, so I don't see any reason why SU(2) shouldn't represent weak isospin, electromagnetism/gravity and weak hypercharge. The main thing is that because it generates the 3 massless gauge bosons, only half of which need to have mass added to them to act as weak gauge bosons, it has exactly the right field mediators for the forces we require. If it doesn't work, the alternative replacement to the Standard Model is SU(3)xSU(2)xSU(2) where the first SU(2) is isospin symmetry acting on left-handed particles and the second SU(2) is electrogravity.

Mathematical review

Following from the discussion in previous posts, it is time to correct the errors of the Standard Model, starting with the U(1) phase or gauge invariance. The use of unitary group U(1) for electromagnetism and weak hypercharge is in error as shown in various ways in the previous posts here, here, and here.

The maths is based on a type of continuous group defined by Sophus Lie in 1873. Dr Woit summarises this very clearly in Not Even Wrong (UK ed., p47): 'A Lie group ... consists of an infinite number of elements continuously connected together. It was the representation theory of these groups that Weyl was studying.

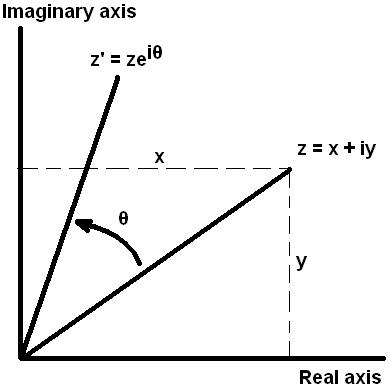

'A simple example of a Lie group together with a representation is that of the group of rotations of the two-dimensional plane. Given a two-dimensional plane with chosen central point, one can imagine rotating the plane by a given angle about the central point. This is a symmetry of the plane. The thing that is invariant is the distance between a point on the plane and the central point. This is the same before and after the rotation. One can actually define rotations of the plane as precisely those transformations that leave invariant the distance to the central point. There is an infinity of these transformations, but they can all be parametrised by a single number, the angle of rotation.

Argand diagram showing rotation by an angle on the complex plane. Illustration credit: based on Fig. 3.1 in Not Even Wrong.

'If one thinks of the plane as the complex plane (the plane whose two coordinates label the real and imaginary part of a complex number), then the rotations can be thought of as corresponding not just to angles, but to a complex number of length one. If one multiplies all points in the complex plane by a given complex number of unit length, one gets the corresponding rotation (this is a simple exercise in manipulating complex numbers). As a result, the group of rotations in the complex plane is often called the 'unitary group of transformations of one complex variable', and written U(1).

'This is a very specific representation of the group U(1), the representation as transformations of the complex plane ... one thing to note is that the transformation of rotation by an angle is formally similar to the transformation of a wave by changing its phase [by Fourier analysis, which represents a waveform of wave amplitude versus time as a frequency spectrum graph showing wave amplitude versus wave frequency by decomposing the original waveform into a series which is the sum of a lot of little sine and cosine wave contributions]. Given an initial wave, if one imagines copying it and then making the copy more and more out of phase with the initial wave, sooner or later one will get back to where one started, in phase with the initial wave. This sequence of transformations of the phase of a wave is much like the sequence of rotations of a plane as one increases the angle of rotation from 0 to 360 degrees. Because of this analogy, U(1) symmetry transformations are often called phase transformations. ...

'In general, if one has an arbitrary number N of complex numbers, one can define the group of unitary transformations of N complex variables and denote it U(N). It turns out that it is a good idea to break these transformations into two parts: the part that just multiplies all of the N complex numbers by the same unit complex number (this part is a U(1) like before), and the rest. The second part is where all the complexity is, and it is given the name of special unitary transformations of N (complex) variables and denoted SU(N). Part of Weyl's achievement consisted in a complete understanding of the representations of SU(N), for any N, no matter how large.

'In the case N = 1, SU(1) is just the trivial group with one element. The first non-trivial case is that of SU(2) ... very closely related to the group of rotations in three real dimensions ... the group of special orthogonal transformations of three (real) variables ... group SO(3). The precise relation between SO(3) and SU(2) is that each rotation in three dimensions corresponds to two distinct elements of SU(2), or SU(2) is in some sense a doubled version of SO(3).'
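Two statements in the quoted passage are easy to check numerically: that multiplying by a complex number of unit length rotates the complex plane without changing distances from the origin, and that two distinct SU(2) elements (U and -U) correspond to one and the same rotation in three dimensions. The following is a minimal pure-Python sketch. The helper names are my own, and the SU(2) to SO(3) map used is the standard one, R_ij = (1/2) Tr(sigma_i U sigma_j U†), with the Pauli matrices sigma.

```python
import cmath
import math


def u1_rotate(z, angle):
    """U(1) action: rotate z by `angle` radians via a unit complex number."""
    return cmath.exp(1j * angle) * z


# Pauli matrices as 2x2 nested lists.
SIGMA = [
    [[0, 1], [1, 0]],
    [[0, -1j], [1j, 0]],
    [[1, 0], [0, -1]],
]


def matmul(a, b):
    """Product of two 2x2 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def dagger(a):
    """Conjugate transpose of a 2x2 matrix."""
    return [[a[j][i].conjugate() for j in range(2)] for i in range(2)]


def negate(a):
    """The matrix -a."""
    return [[-x for x in row] for row in a]


def su2_about_z(angle):
    """SU(2) element exp(-i*angle*sigma_z/2): rotation by `angle` about z."""
    return [[cmath.exp(-0.5j * angle), 0], [0, cmath.exp(0.5j * angle)]]


def to_so3(u):
    """Map U in SU(2) to the 3x3 rotation R_ij = Tr(sigma_i U sigma_j U^dagger)/2."""
    ud = dagger(u)
    rot = []
    for i in range(3):
        row = []
        for j in range(3):
            m = matmul(matmul(SIGMA[i], u), matmul(SIGMA[j], ud))
            row.append(((m[0][0] + m[1][1]) / 2).real)
        rot.append(row)
    return rot


def same_rotation(r1, r2, tol=1e-12):
    """True if two 3x3 matrices agree entry by entry within `tol`."""
    return all(abs(r1[i][j] - r2[i][j]) < tol
               for i in range(3) for j in range(3))


u = su2_about_z(math.pi / 3)
print("|z| before and after U(1) rotation:", abs(3 + 4j), abs(u1_rotate(3 + 4j, 1.234)))
print("U and -U give the same SO(3) rotation:", same_rotation(to_so3(u), to_so3(negate(u))))
```

The same helpers show the 'doubling' directly: `su2_about_z(2 * math.pi)` is minus the identity matrix, yet `to_so3` maps it to the 3x3 identity, so a full turn in three dimensions is only half way round SU(2).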

Hermann Weyl and Eugene Wigner discovered that Lie groups of complex symmetries represent quantum field theory. In 1954, Chen Ning Yang and Robert Mills developed a theory of photon (spin-1 boson) mediator interactions in which the spin of the photon changes the quantum state of the matter emitting or receiving it by inducing a rotation in a Lie group symmetry. The amplitude for such emissions is forced, by an empirical coupling constant insertion, to give the measured Coulomb value for the electromagnetic interaction. Gerard 't Hooft and Martinus Veltman in 1970 argued that the Yang-Mills theory is renormalizable so the problem of running couplings having no limits can be cut off at effective limits to make the theory work (Yang-Mills theories use non-commutative algebra, usually called non-commutative geometry). The photon Yang-Mills theory is U(1). Equivalent Yang-Mills interaction theories of the strong force SU(3) and the weak force isospin group SU(2) in conjunction with the U(1) force result in the symmetry group SU(3) x SU(2) x U(1) which is the Standard Model. Here the SU(2) group must act only on left-handed spinning fermions, breaking the conservation of parity.

Dr Woit's Not Even Wrong at pages 98-100 summarises the problems in the Standard Model. While SU(3) 'has the beautiful property of having no free parameters', the SU(2)xU(1) electroweak symmetry does introduce two free parameters: alpha and the mass of the speculative 'Higgs boson'. However, from solid facts, alpha is not a free parameter but the shielding ratio of the bare core charge of an electron by virtual fermion pairs being polarized in the vacuum and absorbing energy from the field to create short range forces:

"This shielding factor of alpha can actually be obtained by working out the bare core charge (within the polarized vacuum) as follows. Heisenberg’s uncertainty principle says that the product of the uncertainties in momentum and distance is on the order h-bar. The uncertainty in momentum p = mc, while the uncertainty in distance is x = ct. Hence the product of momentum and distance, px = (mc).(ct) = Et where E is energy (Einstein’s mass-energy equivalence). Although we have had to assume mass temporarily here before getting an energy version, this is just what Professor Zee does as a simplification in trying to explain forces with mainstream quantum field theory (see previous post). In fact this relationship, i.e., product of energy and time equalling h-bar, is widely used for the relationship between particle energy and lifetime. The maximum possible range of the particle is equal to its lifetime multiplied by its velocity, which is generally close to c in relativistic, high energy particle phenomenology. Now for the slightly clever bit:

px = h-bar implies (when remembering p = mc, and E = mc²):

x = h-bar/p = h-bar/(mc) = h-bar*c/E

so E = h-bar*c/x

when using the classical definition of energy as force times distance (E = Fx):

F = E/x = (h-bar*c/x)/x = h-bar*c/x².

"So we get the quantum electrodynamic force between the bare cores of two fundamental unit charges, including the inverse square distance law! This can be compared directly to Coulomb’s law, which is the empirically obtained force at large distances (screened charges, not bare charges), and such a comparison tells us exactly how much shielding of the bare core charge there is by the vacuum between the IR and UV cutoffs. So we have proof that the renormalization of the bare core charge of the electron is due to shielding by a factor of alpha. The bare core charge of an electron is 137.036… times the observed long-range (low energy) unit electronic charge. All of the shielding occurs within a range of just 1 fm, because by Schwinger’s calculations the electric field strength of the electron is too weak at greater distances to cause spontaneous pair production from the Dirac sea, so at greater distances there are no pairs of virtual charges in the vacuum which can polarize and so shield the electron’s charge any more.

"One argument that can superficially be made against this calculation (nobody has brought this up as an objection to my knowledge, but it is worth mentioning anyway) is the assumption that the uncertainty in distance is equivalent to real distance in the classical expression that work energy is force times distance. However, since the range of the particle given, in Yukawa’s theory, by the uncertainty principle is the range over which the momentum of the particle falls to zero, it is obvious that the Heisenberg uncertainty range is equivalent to the range of distance moved which corresponds to force by E = Fx. For the particle to be stopped over the range allowed by the uncertainty principle, a corresponding force must be involved. This is more pertinent to the short range nuclear forces mediated by massive gauge bosons, obviously, than to the long range forces.

"It should be noted that the Heisenberg uncertainty principle is not metaphysics but is solid causal dynamics as shown by Popper:

‘… the Heisenberg formulae can be most naturally interpreted as statistical scatter relations, as I proposed [in the 1934 German publication, ‘The Logic of Scientific Discovery’]. … There is, therefore, no reason whatever to accept either Heisenberg’s or Bohr’s subjectivist interpretation of quantum mechanics.’ – Sir Karl R. Popper, Objective Knowledge, Oxford University Press, 1979, p. 303. (Note: statistical scatter gives the energy form of Heisenberg’s equation, since the vacuum contains gauge bosons carrying momentum like light, and exerting vast pressure; this gives the foam vacuum effect at high energy where nuclear forces occur.)

"Experimental evidence:

‘… we find that the electromagnetic coupling grows with energy. This can be explained heuristically by remembering that the effect of the polarization of the vacuum … amounts to the creation of a plethora of electron-positron pairs around the location of the charge. These virtual pairs behave as dipoles that, as in a dielectric medium, tend to screen this charge, decreasing its value at long distances (i.e. lower energies).’ - arxiv hep-th/0510040, p 71.

"In particular:

‘All charges are surrounded by clouds of virtual photons, which spend part of their existence dissociated into fermion-antifermion pairs. The virtual fermions with charges opposite to the bare charge will be, on average, closer to the bare charge than those virtual particles of like sign. Thus, at large distances, we observe a reduced bare charge due to this screening effect.’ – I. Levine, D. Koltick, et al., Physical Review Letters, v.78, 1997, no.3, p.424."
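The comparison made in the quoted derivation, between F = h-bar*c/x² and Coulomb's law for two unit charges, can be checked numerically. The minimal Python sketch below uses standard SI reference values for the constants; the function names are my own. It only checks the arithmetic, namely that the ratio of the two expressions is independent of distance and equal to 1/alpha. It does not by itself test the physical interpretation of that ratio as vacuum shielding.

```python
import math

# SI reference values for the constants.
HBAR = 1.054571817e-34      # reduced Planck constant, J s
C = 299792458.0             # speed of light, m/s
E_CHARGE = 1.602176634e-19  # elementary charge, C
EPS0 = 8.8541878128e-12     # vacuum permittivity, F/m


def uncertainty_force(x):
    """F = hbar*c / x^2, the expression reached in the quoted derivation."""
    return HBAR * C / x**2


def coulomb_force(x):
    """Coulomb force between two unit charges a distance x apart."""
    return E_CHARGE**2 / (4.0 * math.pi * EPS0 * x**2)


def force_ratio(x):
    """Ratio of the two forces; the x^2 factors cancel, leaving 1/alpha."""
    return uncertainty_force(x) / coulomb_force(x)


for x in (1e-15, 1e-10, 1.0):
    print("x =", x, "m, ratio =", force_ratio(x))
```

The ratio comes out close to 137.036 at every distance tried, which is the number quoted in the derivation.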

As for the mass of the 'Higgs boson' that gives mass to particles, there is evidence of its value. On page 98 of Not Even Wrong, Dr Woit points out:

'Another related concern is that the U(1) part of the gauge theory is not asymptotically free, and as a result it may not be completely mathematically consistent.'

He adds that it is a mystery why only left-handed particles experience the SU(2) force, and on page 99 points out that: 'the standard quantum field theory description for a Higgs field is not asymptotically free and, again, one worries about its mathematical consistency.'

Another thing is that the 9 masses of quarks and leptons have to be put into the Standard Model by hand together with 4 mixing angles to describe the interaction strength of the Higgs field with different particles, adding 13 numbers to the Standard Model which one would want to see explained and predicted.

Important symmetries:

- ‘electric charge rotation’ would transform quarks into leptons and vice-versa within a given family: this is described by unitary group U(1). U(1) deals with just 1 type of charge: negative charge, i.e., it ignores positive charge which is treated as a negative charge travelling backwards in time, Feynman's fatally flawed model of a positron or anti-electron, and with solitary particles (which don't actually exist since particles always are produced and annihilated as pairs). U(1) is therefore false when used as a model for electromagnetism, as we will explain in detail in this post. U(1) also represents weak hypercharge, which is similar to electric charge.

- ‘isospin rotation’ would switch the two quarks of a given family, or would switch the lepton and neutrino of a given family: this is described by symmetry unitary group SU(2). Isospin rotation leads directly to the symmetry unitary group SU(2), i.e., rotations in imaginary space with 2 complex co-ordinates generated by 3 operations: the W+, W-, and Z0 gauge bosons of the weak force. These massive weak bosons only interact with left-handed particles (left handed Weyl spinors). SU(2) describes doublets, matter-antimatter pairs such as mesons and (as this blog post is arguing) lepton-antilepton charge pairs in general (electric charge mechanism as well as weak isospin).

- ‘colour rotation’ would change quarks between colour charges (red, blue, green): this is described by symmetry unitary group SU(3). Colour rotation leads directly to the Standard Model symmetry unitary group SU(3), i.e., rotations in imaginary space with 3 complex co-ordinates generated by 8 operations, the strong force gluons. There is also the concept of 'flavor' referring to the different types of quarks (up and down, strange and charm, top and bottom). SU(3) describes triplets of charges, i.e. baryons.

U(1) is a relatively simple phase-transformation symmetry which has a single group generator, leading to a single electric charge. (Hence, you have to treat positive charge as electrons moving backwards in time to make it incorporate antimatter! This is false because things don't travel backwards in time; it violates causality, because we can use pair-production - e.g. electron and positron pairs created by the shielding of gamma rays from cobalt-60 using lead - to create positrons and electrons at the same time, when we choose.) Moreover, it also only gives rise to one type of massless gauge boson, which means it fails to predict the strength of electromagnetism and its causal mechanism (attractions between dissimilar charges, repulsions between similar charges, etc.). SU(2) must be used to model the causal mechanism of electromagnetism and gravity; two charged massless gauge bosons mediate electromagnetic forces, while the neutral massless gauge boson mediates gravitation. Both the detailed mechanism for the forces and the strengths of the interactions (as well as various other predictions) arise automatically from SU(2) with massless gauge bosons replacing U(1).

Fig. 1: The imaginary U(1) gauge invariance of quantum electrodynamics (QED) simply consists of a description of the interaction of a photon with an electron (e is the coupling constant, the effective electric charge after allowing for shielding by the polarized vacuum if the interaction is at high energy, i.e., above the IR cutoff). When the electron's field undergoes a local phase change, a gauge field quantum called a 'virtual photon' is produced, which keeps the Lagrangian invariant; this is how gauge symmetry is supposed to work for U(1).

This doesn't adequately describe the mechanism by which electromagnetic gauge bosons produce electromagnetic forces! It's just too simplistic: the moving electron is viewed as a current, and the photon (field phase) affects that current by interacting with the electron. There is nothing wrong with this simple scheme, but it has nothing to do with the detailed causal, predictive mechanism for electromagnetic attraction and repulsion, and to make this virtual-photon-as-gauge-boson idea work for electromagnetism, you have to add two extra polarizations to the normal two polarizations (electric and magnetic field vectors) of ordinary photons. You might as well replace the photon by two charged massless gauge bosons, instead of adding two extra polarizations! You have so much more to gain from using the correct physics than from adding extra epicycles to a false model to 'make it work'.

This is Feynman’s explanation in his book QED, Penguin, 1990, p120:

'Photons, it turns out, come in four different varieties, called polarizations, that are related geometrically to the directions of space and time. Thus there are photons polarized in the [spatial] X, Y, Z, and [time] T directions. (Perhaps you have heard somewhere that light comes in only two states of polarization - for example, a photon going in the Z direction can be polarized at right angles, either in the X or Y direction. Well, you guessed it: in situations where the photon goes a long distance and appears to go at the speed of light, the amplitudes for the Z and T terms exactly cancel out. But for virtual photons going between a proton and an electron in an atom, it is the T component that is the most important.)'

The gauge bosons of the mainstream electromagnetic model U(1) are supposed to consist of photons with 4 polarizations, not 2. However, U(1) has only one type of electric charge: negative charge. Positive charge is antimatter and is not included. But in the real universe there is as much positive as negative charge around!

We can see this error of U(1) more clearly when considering the SU(3) strong force: the 3 in SU(3) tells us there are three types of color charges, red, blue and green. The anti-charges are anti-red, anti-blue and anti-green, but these anti-charges are not included. Similarly, U(1) only contains one electric charge, negative charge. To make it a reliable and complete theory predictive of everything, it should contain 2 electric charges: positive and negative, and 3 gauge bosons: positive charged massless photons for mediating positive electric fields, negative charged massless photons for mediating negative electric fields, and neutral massless photons for mediating gravitation. The way this correct SU(2) electrogravity unification works was clearly explained in Figures 4 and 5 of the earlier post: http://nige.wordpress.com/2007/05/25/quantum-gravity-mechanism-and-predictions/

Basically, photons are neutral because if they were charged as well as being massless, the magnetic field generated by their motion would produce infinite self-inductance. The photon has two charges (positive electric field and negative electric field) which each produce magnetic fields with opposite curls, cancelling one another and allowing the photon to propagate:

Fig. 2: charged gauge boson mechanism for electromagnetism, as illustrated by the Catt-Davidson-Walton work in charging up transmission lines like capacitors and checking what happens when you discharge the energy through a sampling oscilloscope. They found evidence, discussed in detail in previous posts on this blog, that the existence of an electric field is represented by two opposite-travelling (gauge boson radiation) light velocity field quanta: while overlapping, the electric fields of each add up (reinforce) but the magnetic fields disappear because the curls of the magnetic field components cancel once there is equilibrium of the exchange radiation going along the same path in opposite directions. Hence, electric fields are due to charged, massless gauge bosons with Poynting vectors, being exchanged between fermions. Magnetic fields are cancelled out in certain configurations (such as that illustrated) but in other situations where you send two gauge bosons of opposite charge through one another (in the figure the gauge bosons modelled by electricity have the same charge), you find that the electric field vectors cancel out to give an electrically neutral field, but the magnetic field curls can then add up, explaining magnetism.

The evidence for Fig. 2 is presented near the end of Catt's March 1983 Wireless World article called 'Waves in Space' (typically unavailable on the internet, because Catt won't make available the most useful of his papers for free): when you charge up x metres of cable to v volts, you do so at light speed, and there is no mechanism for the electromagnetic energy to slow down when the energy enters the cable. The nearest page Catt has online about this is here: the battery terminals of a v volt battery are indeed at v volts before you connect a transmission line to them, but that's just because those terminals have been charged up by field energy which is flowing in all directions at light velocity, so only half of the total energy, v/2 volts, is going one way and half is going the other way. Connect anything to that battery and the initial (transient) output at light speed is only half the battery potential; the full battery potential only appears in a cable connected to the battery when the energy has gone to the far end of the cable at light speed and reflected back, adding to further in-flowing energy from the battery on the return trip, and charging the cable to v/2 + v/2 = v volts.

Because electricity is so fast (light speed for the insulator), early investigators like Ampere and Maxwell (who candidly wrote in the 1873 edition of his Treatise on Electricity and Magnetism, 3rd ed., Article 574: '... there is, as yet, no experimental evidence to shew whether the electric current... velocity is great or small as measured in feet per second. ...') had no idea whatsoever of this crucial evidence which shows what electricity is all about. So when you discharge the cable, instead of getting a pulse at v volts coming out with a length of x metres (i.e., taking a time of t = x/c seconds), you instead get just what is predicted by Fig. 2: a pulse of v/2 volts taking 2x/c seconds to exit. In other words, the half of the energy already moving towards the exit end, exits first. That gives a pulse of v/2 volts lasting x/c seconds. Then the half of the energy going initially the wrong way has had time to go to the far end, reflect back, and follow the first half of the energy. This gives the second half of the output, another pulse of v/2 volts lasting for another x/c seconds and following straight on from the first pulse. Hence, the observer measures an output of v/2 volts lasting for a total duration of 2x/c seconds. This is experimental fact. It was Oliver Heaviside - who translated Maxwell's 20 long-hand differential equations into the four vector equations (two divs, two curls) - who experimentally discovered the first evidence for this when solving problems with the Newcastle-Denmark undersea telegraph cable in 1875, using 'Morse Code' (logic signals). Heaviside's theory is flawed physically because he treated rise times as instantaneous, a flaw inherited by Catt, Davidson, and Walton, which blocks a complete understanding of the mechanisms at work. The Catt, Davidson and Walton history is summarised here.
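The discharge result described above (an output of v/2 volts lasting 2x/c seconds rather than v volts lasting x/c seconds) can be reproduced with a very small simulation. The following is a minimal Python sketch under stated assumptions: the cable is lossless and divided into equal cells, one time step is the light-speed transit time across one cell, the stored v volts is modelled as two counter-travelling components of v/2 each, the far end is an open circuit that reflects without inversion, and the exit end feeds a load matched to the cable so nothing reflects there. The matched load and the function name `discharge` are my own assumptions for illustration, and like the Heaviside treatment the sketch uses instantaneous rise times.

```python
def discharge(volts, cells):
    """Return the load voltage at each time step while a charged cable empties.

    The cable holds `volts` as two counter-travelling components of volts/2.
    Index 0 is the open far end; index cells-1 is the end joined to the load.
    """
    right = [volts / 2.0] * cells  # component travelling toward the load
    left = [volts / 2.0] * cells   # component travelling away from the load
    output = []
    for _ in range(3 * cells):     # run past the end of the pulse
        delivered = right[-1]      # leaves the cable into the matched load
        reflected = left[0]        # reaches the open far end and turns back
        right = [reflected] + right[:-1]
        left = left[1:] + [0.0]    # matched load sends nothing back in
        output.append(delivered)
    return output


pulse = discharge(10.0, 4)
print(pulse)
```

For a 10 volt cable of 4 cells the load sees 5 volts for 8 steps (twice the one-way transit time) and then nothing, which is the v/2 for 2x/c behaviour described in the text.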

[The original Catt-Davidson-Walton paper can be found here (first page) and here (second page) although it contains various errors. My discussion of it is here. For a discussion of the two major awards Catt received for his invention of the first ever practical wafer-scale memory to come to market despite censorship such as the New Scientist of 12 June 1986, p35, quoting anonymous sources who called Catt 'either a crank or visionary' - a £16 million British government and foreign sponsored 160 MB 'chip' wafer back in 1988 - see this earlier post and the links it contains. Note that the editors of New Scientist are still vandals today. Jeremy Webb, current editor of New Scientist, graduated in physics and solid state electronics, so he has no good excuse for finding this stuff - physics and electronics - over his head. The previous editor to Jeremy was Dr Alum M. Anderson who on 2 June 1997 wrote to me the following insult to my intelligence: 'I've looked through the files and can assure you that we have no wish to suppress the discoveries of Ivor Catt nor do we publish only articles from famous people. You should understand that New Scientist is not a primary journal and does not publish the first accounts of new experiments and original theories. These are better submitted to an academic journal where they can be subject to the usual scientific review. New Scientist does not maintain the large panel of scientific referees necessary for this review process. I'm sure you understand that science is now a gigantic enterprise and a small number of scientifically-trained journalists are not the right people to decide which experiments and theories are correct. My advice would be to select an appropriate journal with a good reputation and send Mr Catt's work there. Should Mr Catt's theories be accepted and published, I don't doubt that he will gain recognition and that we will be interested in writing about him.' 
Both Catt and I had already sent Dr Anderson abstracts from Catt's peer-reviewed papers such as IEEE Trans. on Electronic Computers, vol. EC-16, no. 6, Dec. 67. Also Proc. IEE, June 83 and June 87. Also a summary of the book "Digital Hardware Design" by Catt et. al., pub. Macmillan 1979. I wrote again to Dr Anderson with this information, but he never published it; Catt on 9 June 1997 published his response on the internet which he carbon copied to the editor of New Scientist. Years later, when Jeremy Webb had taken over, I corresponded with him by email. The first time Jeremy responded was on an evening in Dec 2002, and all he wrote was a tirade about his email box being full when writing a last-minute editorial. I politely replied that time, and then sent him by recorded delivery a copy of the Electronics World January 2003 issue with my cover story about Catt's latest invention for saving lives. He never acknowledged it or responded. When I called the office politely, his assistant was rude and said she had thrown it away unread without him seeing it! I sent another but yet again, Jeremy wasted time and didn't publish a thing. According to the Daily Telegraph, 24 Aug. 2005: 'Prof Heinz Wolff complained that cosmology is "religion, not science." Jeremy Webb of New Scientist responded that it is not religion but magic. ... "If I want to sell more copies of New Scientist, I put cosmology on the cover," said Jeremy.' But even when Catt's stuff was applied to cosmology in Electronics World Aug. 02 and Apr. 03, it was still ignored by New Scientist! Helene Guldberg has written a 'Spiked Science' article called Eco-evangelism about Jeremy Webb's bigoted policies and sheer rudeness, while Professor John Baez has publicised the decline of New Scientist due to the junk they publish in place of solid physics. To be fair, Jeremy was polite to Prime Minister Tony Blair, however. I should also add that Catt is extremely rude in refusing to discuss facts. 
Just because he has a few new solid facts which have been censored out of mainstream discussion even after peer-reviewed publication, he incorrectly thinks that his vast assortment of more half-baked speculations are equally justified. For example, he refuses to discuss or co-author a paper on the model here. Catt does not understand Maxwell's equations (he thinks that if you simply ignore 18 out of 20 long hand Maxwell differential equations and show that when you reduce the number of spatial dimensions from 3 to 1, then - since the remaining 2 equations in one spatial dimension contain two vital constants - that means that Maxwell's equations are 'shocking ... nonsense', and he refuses to accept that he is talking complete rubbish in this empty argument), and since he won't discuss physics he is not a general physics authority, although he is expert in experimental research on logic signals, e.g., his paper in IEEE Trans. on Electronic Computers, vol. EC-16, no. 6, Dec. 67.]

Fig. 3: Coulomb force mechanism for electric charged massless gauge bosons. The SU(2) electrogravity mechanism. Think of two flak-jacket protected soldiers firing submachine guns towards one another, while from a great distance other soldiers (who are receding from the conflict) fire bullets in at both of them. They will repel because of net outward force on them, due to successive impulses both from bullet strikes received on the sides facing one another, and from recoil as they fire bullets. The bullets hitting their backs have relatively smaller impulses since they are coming from large distances and so due to drag effects their force will be nearly spent upon arrival (analogous to the redshift of radiation emitted towards us by the bulk of the receding matter, at great distances, in our universe). That explains the electromagnetic repulsion physically. Now think of the two soldiers as comrades surrounded by a mass of armed savages, approaching from all sides. The soldiers stand back to back, shielding one another’s back, and fire their submachine guns outward at the crowd. In this situation, they attract, because of a net inward acceleration on them, pushing their backs towards one another, both due to the recoils of the bullets they fire, and from the strikes each receives from bullets fired in at them. When you add up the arrows in this diagram, you find that attractive forces between dissimilar unit charges have equal magnitude to repulsive forces between similar unit charges. This theory holds water!

This predicts the right strength of gravity, because the charged gauge bosons will cause the effective potential of those fields in radiation exchanges between similar charges throughout the universe (drunkard’s walk statistics) to multiply up the average potential between two charges by a factor equal to the square root of the number of charges in the universe. This is so because any straight line summation will on average encounter similar numbers of positive and negative charges as they are randomly distributed, so such a linear summation of the charges that gauge bosons are exchanged between cancels out. However, if the paths of gauge bosons exchanged between similar charges are considered, you do get a net summation.

Fig. 4: Charged gauge bosons mechanism and how the potential adds up, predicting the relatively intense strength (large coupling constant) for electromagnetism relative to gravity according to the path-integral Yang-Mills formulation. For gravity, the gravitons (like photons) are uncharged, so there is no adding up possible. But for electromagnetism, the attractive and repulsive forces are explained by charged gauge bosons. Notice that massless charged electromagnetic radiation (i.e., charged particles going at light velocity) is forbidden in electromagnetic theory (on account of the infinite amount of self-inductance created by the uncancelled magnetic field of such radiation!) only if the radiation is going in only one direction, and this is obviously not the case for Yang-Mills exchange radiation, where the radiant power of the exchange radiation from charge A to charge B is the same as that from charge B to charge A (in situations of equilibrium, which quickly establish themselves). Where you have radiation going in opposite directions at the same time, the handedness of the curl of the magnetic field is such that it cancels the magnetic fields completely, preventing the self-inductance issue. Therefore, although you can never radiate a charged massless radiation beam in one direction, such beams do radiate in two directions while overlapping. This is of course what happens with the simple capacitor consisting of conductors with a vacuum dielectric: electricity enters as electromagnetic energy at light velocity and never slows down. When the charging stops, the trapped energy in the capacitor travels in all directions, in equilibrium, so magnetic fields cancel and can’t be observed. This is proved by discharging such a capacitor and measuring the output pulse with a sampling oscilloscope.

The price of the random walk statistics needed to describe such a zig-zag summation (avoiding opposite charges!) is that the net force is not approximately 10^80 times the force of gravity between a single pair of charges (as it would be if you simply add up all the charges in a coherent way, like a line of aligned charged capacitors, with linearly increasing electric potential along the line), but is the square root of that multiplication factor on account of the zig-zag inefficiency of the sum, i.e., about 10^40 times gravity. Hence, the fact that equal numbers of positive and negative charges are randomly distributed throughout the universe makes electromagnetism strength only 10^40/10^80 = 10^-40 as strong as it would be if all the charges were aligned in a row like a row of charged capacitors (or batteries) in series circuit. Since there are around 10^80 randomly distributed charges, electromagnetism as multiplied up by the fact that charged massless gauge bosons are Yang-Mills radiation being exchanged between all charges (including all charges of similar sign) is 10^40 times gravity. You could picture this summation by the physical analogy of a lot of charged capacitor plates in space, with the vacuum as the dielectric between the plates. If the capacitor plates come with two opposite charges and are all over the place at random, the average addition of potential works out as that between one pair of charged plates multiplied by the square root of the total number of pairs of plates. This is because of the geometry of the addition. Intuitively, you may incorrectly think that the sum must be zero because on average it will cancel out. However, it isn’t, and is like the diffusive drunkard’s walk where the average distance travelled is equal to the average length of a step multiplied by the square root of the number of steps. If you average a large number of different random walks, because they will all have random net directions, the vector sum is indeed zero. 
But for individual drunkard’s walks, there is the factual solution that a net displacement does occur. This is the basis for diffusion. On average, gauge bosons spend as much time moving away from us as towards us while being exchanged between the charges of the universe, so the average effect of divergence is exactly cancelled by the average convergence, simplifying the calculation. This model also explains why electromagnetism is attractive between dissimilar charges and repulsive between similar charges.
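The square-root scaling invoked above can be checked numerically. This is a minimal sketch of a one-dimensional drunkard's walk with unit steps, offered only as an illustration of the statistics: the function name `walk_statistics`, the step count, the trial count and the seed are arbitrary assumptions, and the 'average distance' is taken here to mean the root-mean-square displacement.

```python
import math
import random


def walk_statistics(steps, trials, seed=0):
    """Simulate `trials` 1-D random walks of `steps` unit steps each.

    Returns (rms_displacement, mean_displacement) over all the walks.
    """
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    total = 0
    total_sq = 0
    for _ in range(trials):
        position = sum(rng.choice((-1, 1)) for _ in range(steps))
        total += position
        total_sq += position * position
    return math.sqrt(total_sq / trials), total / trials


rms, mean = walk_statistics(steps=400, trials=2000)
print("RMS displacement:", rms, "expected about", math.sqrt(400))
print("mean displacement over all walks:", mean)

# The same square-root rule applied to the post's figure of 10^80 charges.
print("sqrt(10^80) == 10^40:", math.isqrt(10**80) == 10**40)
```

The mean over many walks comes out near zero while the RMS displacement of an individual walk comes out near the square root of the number of steps, which is the distinction the paragraph above draws.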

For some of the many quantitative predictions and tests of this model, see previous posts such as this one.

SU(2), as used in the SU(2)xU(1) electroweak symmetry group, applies only to left-handed particles. So it's pretty obvious that half the potential application of SU(2) is being missed out somehow in SU(2)xU(1).

SU(2) is fairly similar to U(1) in Fig. 1 above, except that SU(2) involves 2^2 - 1 = 3 types of charges (positive, negative and neutral), which (by moving) generate 2 types of charged currents (positive and negative currents) and 1 neutral current (i.e., the motion of an uncharged particle produces a neutral current by analogy to the process whereby the motion of a charged particle produces a charged current), requiring 3 types of gauge boson (W+, W-, and Z0).
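The counting used here is the standard rule that SU(N) has N^2 - 1 generators, one gauge boson per generator. A small sketch (the function name `su_generators` is just an illustrative choice):

```python
def su_generators(n):
    """Number of generators (and hence gauge bosons) of SU(n): n^2 - 1."""
    if n < 2:
        raise ValueError("SU(n) is only considered here for n >= 2")
    return n * n - 1


for n in (2, 3):
    print(f"SU({n}): {su_generators(n)} gauge bosons")
```

The same rule gives the 3 weak gauge bosons of SU(2) and the 8 gluons of SU(3).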

For weak interactions we need the whole of SU(2)xU(1) because SU(2) models weak isospin by using electric charges as generators, while U(1) is used to represent weak hypercharge, which looks almost identical to Fig. 1 (which illustrates the use of U(1) for quantum electrodynamics). The SU(2) isospin part of the weak interaction SU(2)xU(1) applies to only left-handed fermions, while the U(1) weak hypercharge part applies to both types of handedness, although the weak hypercharges of left and right handed fermions are not the same (see earlier post for the weak hypercharges of fermions with different spin handedness).

It is interesting that the correct SU(2) symmetry predicts massless versions of the weak gauge bosons (W+, W-, and Z0). Then the mainstream go to a lot of trouble to make them massive by adding some kind of speculative Higgs field, without considering whether the massless versions really exist as the proper gauge bosons of electromagnetism and gravity. A lot of the problem is that the self-interaction of charged massless gauge bosons is a benefit in explaining the mechanism of electromagnetism (since two similar charged electromagnetic energy currents flowing through one another cancel out each other's magnetic fields, preventing infinite self-inductance, and allowing charged massless radiation to propagate freely so long as it is exchange radiation in equilibrium with equal amounts flowing from charge A to charge B as flow from charge B to charge A; see Fig. 5 of the earlier post here). Instead of seeing how the mutual interactions of charged gauge bosons allow exchange radiation to propagate freely without complexity, the mainstream opinion is that this might (it can't) cause infinities because of the interactions. Therefore, mainstream (false) consensus is that weak gauge bosons have to have a great mass, simply in order to remove an enormous number of unwanted complex interactions! They simply are not looking at the physics correctly.

U(2) and unification

Dr Woit has some ideas on how to proceed with the Standard Model: ‘Supersymmetric quantum mechanics, spinors and the standard model’, Nuclear Physics, v. B303 (1988), pp. 329-42; and ‘Topological quantum theories and representation theory’, Differential Geometric Methods in Theoretical Physics: Physics and Geometry, Proceedings of NATO Advanced Research Workshop, Ling-Lie Chau and Werner Nahm, Eds., Plenum Press, 1990, pp. 533-45. He summarises the approach in http://www.arxiv.org/abs/hep-th/0206135:

‘… [the theory] should be defined over a Euclidean signature four dimensional space since even the simplest free quantum field theory path integral is ill-defined in a Minkowski signature. If one chooses a complex structure at each point in space-time, one picks out a U(2) [is a proper subset of] SO(4) (perhaps better thought of as a U(2) [is a proper subset of] Spin^c (4)) and … it is argued that one can consistently think of this as an internal symmetry. Now recall our construction of the spin representation for Spin(2n) as Λ*(C^n) applied to a ‘vacuum’ vector.

‘Under U(2), the spin representation has the quantum numbers of a standard model generation of leptons… A generation of quarks has the same transformation properties except that one has to take the ‘vacuum’ vector to transform under the U(1) with charge 4/3, which is the charge that makes the overall average U(1) charge of a generation of leptons and quarks to be zero. The above comments are … just meant to indicate how the most basic geometry of spinors and Clifford algebras in low dimensions is rich enough to encompass the standard model and seems to be naturally reflected in the electro-weak symmetry properties of Standard Model particles…’

The SU(3) strong force (colour charge) gauge symmetry

The SU(3) strong interaction - which has 3 color charges (red, blue, green) and 3^2 - 1 = 8 gauge bosons - is again virtually identical to the U(1) scheme in Fig. 1 above (except that there are 3 charges and 8 spin-1 gauge bosons called gluons, instead of the alleged 1 charge and 1 gauge boson in the flawed U(1) model of QED, and the 8 gluons carry color charge, whereas the photons of U(1) are uncharged). The SU(3) symmetry is actually correct because it is an empirical model based on observed particle physics, and the fact that the gauge bosons of SU(3) do carry colour makes it a proper causal model of short range strong interactions, unlike U(1). For an example of the evidence for SU(3), see the illustration and history discussion in this earlier post. SU(3) is based on an observed (empirical, experimentally determined) particle physics symmetry scheme called the eightfold way. This is pretty solid experimentally, and summarised all the high energy particle physics experiments from about the end of WWII to the late 1960s. SU(2) describes the mesons which were originally studied in natural cosmic radiation (pions were the first mesons discovered, and they were found in cosmic radiation from outer space in 1947, at Bristol University). A type of meson, the pion, is the long-range mediator of the strong nuclear force between nucleons (neutrons and protons), which normally prevents the nuclei of atoms from exploding under the immense Coulomb repulsion of having many protons confined in the small space of the nucleus. The pion was accepted as the gauge boson of the strong force predicted by Japanese physicist Yukawa, who in 1949 was awarded the Nobel Prize for predicting that meson right back in 1935. So there is plenty of evidence for both SU(3) color forces and SU(2) isospin. The problems all arise from U(1). 
To give an example of how SU(3) works well with charged gauge bosons, gluons, remember that this property of gluons is responsible for the major discovery of asymptotic freedom of confined quarks. What happens is that the mutual interference of the 8 different types of charged gluons with pairs of virtual quarks and virtual antiquarks at very small distances between particles (high energy) weakens the color force. The gluon-gluon interactions screen the color charge at short distances because each gluon contains two color charges. If each gluon contained just one color charge, like the virtual fermions in pair production in QED, then the screening effect would be most significant at large, rather than short, distances. Because the effective colour charge diminishes at very short distances, for a particular range of distances this color charge fall as you get closer offsets the inverse-square force law effect (the divergence of effective field lines), so the quarks are completely free - within given limits of distance - to move around within a neutron or a proton. This is asymptotic freedom, an idea from SU(3) that was published in 1973 and resulted in Nobel prizes in 2004. Although colour charges are confined in this way, some strong force 'leaks out' as virtual hadrons like neutral pions and rho particles which account for the strong force on the scale of nuclear physics (a much larger scale than is the case in fundamental particle physics): the mechanism here is similar to the way that atoms which are electrically neutral as a whole can still attract one another to form molecules, because there is a residual of the electromagnetic force left over. The strong interaction weakens exponentially in addition to the usual fall in potential (1/distance) or force (inverse square law), so at large distances compared to the size of the nucleus it is effectively zero. Only electromagnetic and gravitational forces are significant at greater distances. 
The weak force is very similar to the electromagnetic force but is short ranged because the gauge bosons of the weak force are massive. The massiveness of the weak force gauge bosons also reduces the strength of the weak interaction compared to electromagnetism.

The mechanism for the fall in color charge coupling strength due to interference of charged gauge bosons is not the whole story. Where is the energy of the field going where the effective charge falls as you get closer to the middle? Obvious answer: the energy lost from the strong color charges goes into the electromagnetic charge. Remember, short-range field charges fall as you get closer to the particle core, while electromagnetic charges increase; these are empirical facts. The strong charge decreases sharply from about 137e at the greatest distances it extends to (via pions) to around 0.15e at 91 GeV, while over the same range of scattering energies (which are approximately inversely proportional to the distance from the particle core), the electromagnetic charge has been observed to increase by 7%. We need to apply a new type of continuity equation to the conservation of gauge boson exchange radiation energy of all types, in order to deduce vital new physical insights from the comparison of these figures for charge variation as a function of distance. The suggested mechanism in a previous post is:

'We have to understand Maxwell’s equations in terms of the gauge boson exchange process for causing forces and the polarised vacuum shielding process for unifying forces into a unified force at very high energy. If you have one force (electromagnetism) increase, more energy is carried by virtual photons at the expense of something else, say gluons. So the strong nuclear force will lose strength as the electromagnetic force gains strength. Thus simple conservation of energy will explain and allow predictions to be made on the correct variation of force strengths mediated by different gauge bosons. When you do this properly, you learn that stringy supersymmetry first isn’t needed and second is quantitatively plain wrong. At low energies, the experimentally determined strong nuclear force coupling constant which is a measure of effective charge is alpha = 1, which is about 137 times the Coulomb law, but it falls to 0.35 at a collision energy of 2 GeV, 0.2 at 7 GeV, and 0.1 at 200 GeV or so. So the strong force falls off in strength as you get closer by higher energy collisions, while the electromagnetic force increases! Conservation of gauge boson mass-energy suggests that energy being shielded from the electromagnetic force by polarized pairs of vacuum charges is used to power the strong force, allowing quantitative predictions to be made and tested, debunking supersymmetry and existing unification pipe dreams.' - http://nige.wordpress.com/2007/05/25/quantum-gravity-mechanism-and-predictions/

Force strengths as a function of distance from a particle core

I've written previously that the existing graphs showing U(1), SU(2) and SU(3) force strengths as a function of energy are pretty meaningless; they do not specify which particles are under consideration. If you scatter leptons at energies up to those which so far have been available for experiments, they don't exhibit any strong force SU(3) interactions. What should be plotted is effective strong, weak and electromagnetic charge as a function of distance from particles. This is easily deduced because the distance of closest approach of two charged particles in a head-on scatter reaction is easily calculated: as they approach with a given initial kinetic energy, the repulsive force between them increases, which slows them down until they stop at a particular distance, and they are then repelled away. So you simply equate the initial kinetic energy of the particles with the potential energy of the repulsive force as a function of distance, and solve for distance. The initial kinetic energy is radiated away as they decelerate. There is some evidence from particle collision experiments that the SU(3) effective charge really does decrease as you get closer to quarks, while the electromagnetic charge increases. Levine and Koltick published in PRL (v.78, 1997, no.3, p.424) that the electron's charge increases from e to 1.07e as you go from low energy physics to collisions of electrons at an energy of 91 GeV, i.e., a 7% increase in charge. At low energies, the experimentally determined strong nuclear force coupling constant which is a measure of effective charge is alpha = 1, which is about 137 times the Coulomb law, but it falls to 0.35 at a collision energy of 2 GeV, 0.2 at 7 GeV, and 0.1 at 200 GeV or so.
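The closest-approach calculation described above (equate the initial kinetic energy to the Coulomb potential energy and solve for distance) can be sketched as follows. This is only a rough illustration under simplifying assumptions that are not in the original: an unscreened Coulomb potential with fixed charge e (no running of the charge), no relativistic or recoil corrections, and the quoted energy treated as the kinetic energy available in the head-on approach. The function name `closest_approach_m` is hypothetical.

```python
import math

E_CHARGE = 1.602176634e-19    # elementary charge in coulombs
EPSILON_0 = 8.8541878128e-12  # vacuum permittivity in F/m


def closest_approach_m(kinetic_energy_ev, z1=1, z2=1):
    """Distance (metres) at which Coulomb potential energy equals the kinetic energy.

    Solves E = z1*z2*e^2 / (4*pi*eps0*r) for r, assuming a bare,
    unscreened Coulomb potential with constant charges.
    """
    energy_j = kinetic_energy_ev * E_CHARGE  # convert eV to joules
    return z1 * z2 * E_CHARGE**2 / (4 * math.pi * EPSILON_0 * energy_j)


for energy_ev in (1e6, 2e9, 91e9):
    print(f"{energy_ev:.3g} eV -> {closest_approach_m(energy_ev):.3e} m")
```

In this simple picture the distance is inversely proportional to the collision energy, which is the relationship the post relies on when converting scattering energies into distances from the particle core.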

The full investigation of running-couplings and the proper unification of the corrected Standard Model is the next priority for detailed investigation. (Some details of the mechanism can be found in several other recent posts on this blog, e.g., here.)

'The observed coupling constant for W’s is much the same as that for the photon - in the neighborhood of j [Feynman’s symbol j is related to alpha or 1/137.036… by: alpha = j^2 = 1/137.036…]. Therefore the possibility exists that the three W’s and the photon are all different aspects of the same thing. [This seems to be the case, given how the handedness of the particles allows them to couple to massive particles, explaining masses, chiral symmetry, and what is now referred to in the SU(2)xU(1) scheme as ‘electroweak symmetry breaking’.] Stephen Weinberg and Abdus Salam tried to combine quantum electrodynamics with what’s called the ‘weak interactions’ (interactions with W’s) into one quantum theory, and they did it. But if you just look at the results they get you can see the glue [Higgs mechanism problems], so to speak. It’s very clear that the photon and the three W’s [W+, W-, and W0 /Z0 gauge bosons] are interconnected somehow, but at the present level of understanding, the connection is difficult to see clearly - you can still see the ‘seams’ [Higgs mechanism problems] in the theories; they have not yet been smoothed out so that the connection becomes … more correct.' [Emphasis added.] - R. P. Feynman, QED, Penguin, 1990, pp141-142.

Mechanism for loop quantum gravity with spin-1 (not spin-2) gravitons

Peter Woit gives a discussion of the basic principle of LQG in his book:

‘In loop quantum gravity, the basic idea is to use the standard methods of quantum theory, but to change the choice of fundamental variables that one is working with. It is well known among mathematicians that an alternative to thinking about geometry in terms of curvature fields at each point in a space is to instead think about the holonomy [whole rule] around loops in the space. The idea is that in a curved space, for any path that starts out somewhere and comes back to the same point (a loop), one can imagine moving along the path while carrying a set of vectors, and always keeping the new vectors parallel to older ones as one moves along. When one gets back to where one started and compares the vectors one has been carrying with the ones at the starting point, they will in general be related by a rotational transformation. This rotational transformation is called the holonomy of the loop. It can be calculated for any loop, so the holonomy of a curved space is an assignment of rotations to all loops in the space.’ - P. Woit, Not Even Wrong, Jonathan Cape, London, 2006, p189.

I watched Lee Smolin's Perimeter Institute lectures, "Introduction to Quantum Gravity", and he explains that loop quantum gravity is the idea of applying the path integrals of quantum field theory to quantize gravity by summing over interaction history graphs in a network (such as a Penrose spin network) which represents the quantum mechanical vacuum through which vector bosons such as gravitons are supposed to travel in a standard model-type, Yang-Mills, theory of gravitation. This summing of interaction graphs successfully allows a basic framework for general relativity to be obtained from quantum gravity.

It's pretty evident that the quantum gravity loops are best thought of as being the closed exchange cycles of gravitons going between masses (or other gravity field generators like energy fields), to and fro, in an endless cycle of exchange. That's the loop mechanism, the closed cycle of Yang-Mills exchange radiation being exchanged from one mass to another, and back again, continually.

According to this idea, the graviton interaction nodes are associated with the 'Higgs field quanta' which generate mass. Hence, in a Penrose spin network, the vertices represent the points where quantized masses exist. Some predictions from this are here.

Professor Penrose's interesting original article on spin networks, Angular Momentum: An Approach to Combinatorial Space-Time, published in 'Quantum Theory and Beyond' (Ted Bastin, editor), Cambridge University Press, 1971, pp. 151-80, is available online, courtesy of Georg Beyerle and John Baez.

Update (25 June 2007):

Lubos Motl versus Mark McCutcheon's book The Final Theory

Seeing that there is some alleged evidence that mainstream string theorists are bigoted charlatans, string theorist Dr Lubos Motl, who is soon leaving his Assistant Professorship at Harvard, made me uneasy when he attacked Mark McCutcheon's book The Final Theory. Motl wrote a blog post attacking McCutcheon's book by saying that: 'Mark McCutcheon is a generic arrogant crackpot whose IQ is comparable to chimps.' Seeing that Motl is a stringer, this kind of abuse coming from him sounds like praise to my ears. Maybe McCutcheon is not so wrong? Anyway, at lunch time today, I was in Colchester town centre and needed to look up a quotation in one of Feynman's books. Directly beside Feynman's QED book, on the shelf of Colchester Public Library, was McCutcheon's chunky book The Final Theory. I found the time to look up what I wanted and to read all the equations in McCutcheon's book.

Motl ignores McCutcheon's theory entirely, and Motl is being dishonest when claiming: 'his [McCutcheon's] unification is based on the assertion that both relativity as well as quantum mechanics is wrong and should be abandoned.'

This sort of deception is easily seen, because it has nothing to do with McCutcheon's theory! McCutcheon's The Final Theory is full of boring controversy or error, such as the sort of things Motl quotes, but the core of the theory is completely different and takes up just two pages: 76 and 194. McCutcheon claims there's no gravity because the Earth's radius is expanding at an accelerating rate equal to the acceleration of gravity at Earth's surface, g = 9.8 m/s^2. Thus, in one second, Earth's radius (in McCutcheon's theory) expands by (1/2)gt^2 = 4.9 m.

I showed in an earlier post that there is a simple relationship between Hubble's empirical redshift law for the expansion of the universe (which can't be explained by tired light ideas and so is a genuine observation) and acceleration:

Hubble recession: v = HR = dR/dt, so dt = dR/v, hence outward acceleration a = dv/dt = d[HR]/[dR/v] = vH = RH^2

McCutcheon instead defines a 'universal atomic expansion rate' on page 76 of The Final Theory which divides the increase in radius of the Earth over a one second interval (4.9 m) into the Earth's radius (6,378,000 m, or 6.378*10^6 m). I don't like the fact he doesn't specify a formula properly to define his 'universal atomic expansion rate'.

McCutcheon should be clear: he is dividing (1/2)gt^2 into radius of Earth, RE, to get his 'universal atomic expansion rate', XA:

XA = (1/2)gt^2/RE,

which is a dimensionless ratio. On page 77, McCutcheon honestly states: 'In expansion theory, the gravity of an object or planet is dependent on it size. This is a significant departure from Newton's theory, in which gravity is dependent on mass.' At first glance, this is a crazy theory, requiring Earth (and all the atoms in it, for he makes the case that all masses expand) to expand much faster than the rate of expansion of the universe.

However, on page 194, he argues that the outward acceleration of an atom of radius R is:

$a = X_A R$,

now the first thing to notice is that acceleration has units of $\mathrm{m\,s^{-2}}$ and $R$ has units of m. So this equation is dimensionally false if $X_A = (1/2)gt^2/R_E$. The only way to make $a = X_A R$ dimensionally consistent is to change the definition of $X_A$ by dropping $t^2$ from the dimensionless ratio $(1/2)gt^2/R_E$, giving the ratio:

$X_A = (1/2)g/R_E$,

which has the correct units of $\mathrm{s^{-2}}$. So we end up with this dimensionally consistent version of McCutcheon's formula for the outward acceleration of an atom of radius $R$ (we will use the average radius of orbit of the chaotic electron path in the ground state of a hydrogen atom for $R$, which is $5.29 \times 10^{-11}$ m):

$a = X_A R = [(1/2)g/R_E]R$, which can be equated to Newton's formula for the acceleration due to a mass $m$ (here the mass of a hydrogen atom, $1.67 \times 10^{-27}$ kg):

$a = [(1/2)g/R_E]R = mG/R^2$.
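The dimensional argument above can be checked mechanically. The sketch below is only an illustration: it tracks exponents of (metre, second, kilogram) as tuples, and the helper names are invented for this example.

```python
# Dimensions stored as exponents of (metre, second, kilogram).
def mul(a, b):
    """Dimensions of a product: exponents add."""
    return tuple(x + y for x, y in zip(a, b))


def div(a, b):
    """Dimensions of a quotient: exponents subtract."""
    return tuple(x - y for x, y in zip(a, b))


LENGTH = (1, 0, 0)
TIME = (0, 1, 0)
MASS = (0, 0, 1)
ACCEL = (1, -2, 0)            # m s^-2
BIG_G = (3, -2, -1)           # m^3 kg^-1 s^-2

# Original definition: (1/2) g t^2 / R_E  (pure numbers carry no dimension)
x_a_original = div(mul(ACCEL, mul(TIME, TIME)), LENGTH)
# Corrected definition: (1/2) g / R_E
x_a_corrected = div(ACCEL, LENGTH)

original_ok = mul(x_a_original, LENGTH) == ACCEL
corrected_ok = mul(x_a_corrected, LENGTH) == ACCEL
newton_ok = div(mul(MASS, BIG_G), mul(LENGTH, LENGTH)) == ACCEL

print("original X_A dimensions:", x_a_original, "-> a = X_A R consistent?", original_ok)
print("corrected X_A dimensions:", x_a_corrected, "-> a = X_A R consistent?", corrected_ok)
print("m G / R^2 is an acceleration?", newton_ok)
```

Only the corrected definition, with units of inverse seconds squared, makes both sides of the equation an acceleration.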

Hence, McCutcheon on page 194 calculates a value for G by rearranging these equations:

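$G = (1/2)gR^3/(R_E m)$

$= (1/2) \times 9.81 \times (5.29 \times 10^{-11})^3 / [(6.378 \times 10^6) \times (1.67 \times 10^{-27})]$

$= 6.82 \times 10^{-11}\ \mathrm{m^3/(kg\,s^2)}$,

which is only 2% higher than the measured value of

$G = 6.673 \times 10^{-11}\ \mathrm{m^3/(kg\,s^2)}$.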
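The arithmetic above is easy to reproduce. This short sketch uses only the numbers quoted in this post; the variable names are chosen for illustration.

```python
# Reproduce the estimate G = (1/2) g R^3 / (R_E m) with the figures quoted above.
g = 9.81              # m/s^2, surface gravity
R = 5.29e-11          # m, ground-state orbit radius of hydrogen
R_E = 6.378e6         # m, Earth's radius
m = 1.67e-27          # kg, mass of a hydrogen atom
G_measured = 6.673e-11  # m^3/(kg s^2), measured value quoted above

G_estimate = 0.5 * g * R**3 / (R_E * m)
percent_high = 100.0 * (G_estimate / G_measured - 1.0)

print(f"G estimate = {G_estimate:.3e} m^3/(kg s^2)")
print(f"difference from measured value = {percent_high:.1f}%")
```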

After getting this result on page 194, McCutcheon remarks on page 195: 'Recall ... that the value for XA was arrived at by measuring a dropped object in relation to a hypothesized expansion of our overall planet, yet here this same value was borrowed and successfully applied to the proposed expansion of the tiniest atom.'

We can compress McCutcheon's theory: what he is basically saying is the scaling relation:

$a = (1/2)g(R/R_E)$, which, when set equal to Newton's law $mG/R^2$, rearranges to give $G = (1/2)gR^3/(R_E m)$.

However, McCutcheon's own formula is just his guessed scaling law: $a = (1/2)g(R/R_E)$.

Although this quite accurately scales the acceleration of gravity at Earth's surface ($g$ at $R_E$) to the acceleration of gravity at the ground state orbit radius of a hydrogen atom ($a$ at $R$), it is not clear whether this is just a coincidence or whether it really has anything to do with McCutcheon's expanding matter idea. He did not derive the relationship; he just defined it by dividing the increase in radius by the Earth's radius and then using this ratio in another expression, which is again defined without a rigorous theory underpinning it. In its present form, it is numerology. Furthermore, the theory is not universal: the basic scaling law that McCutcheon obtains does not predict the gravitational attraction of the two balls Cavendish measured; instead it only relates the gravity at Earth's surface to that at the surface of an atom, and so seems to be guesswork or numerology (although it is an impressively accurate 'coincidence'). It doesn't have the universal application of Newton's law. There may be another reason why $a = (1/2)g(R/R_E)$ is a fairly accurate and impressive relationship.
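One way to see the lack of universality is to apply the same scaling law to a laboratory-sized object and see what value of G it would imply. The sketch below does this for a hypothetical solid lead sphere of radius 0.15 m. The radius is an assumption chosen for illustration and is not a figure taken from Cavendish's apparatus; the density of lead is taken as about 11,340 kg/m^3.

```python
import math

g = 9.81                # m/s^2
R_E = 6.378e6           # m, Earth's radius as quoted in the post
G_MEASURED = 6.673e-11  # m^3/(kg s^2)


def implied_G(R, m):
    """G implied by setting (1/2) g (R / R_E) equal to m G / R^2."""
    return 0.5 * g * R**3 / (R_E * m)


def sphere_mass(R, density):
    """Mass of a uniform solid sphere."""
    return (4.0 / 3.0) * math.pi * R**3 * density


# Hydrogen atom, using the figures from the post.
G_atom = implied_G(5.29e-11, 1.67e-27)

# HYPOTHETICAL lead sphere (radius is an illustrative assumption).
R_ball = 0.15           # m
LEAD_DENSITY = 11340.0  # kg/m^3, approximate
G_ball = implied_G(R_ball, sphere_mass(R_ball, LEAD_DENSITY))

print(f"hydrogen atom: implied G / measured G = {G_atom / G_MEASURED:.2f}")
print(f"lead sphere:   implied G / measured G = {G_ball / G_MEASURED:.2f}")
```

Because the sphere's mass scales as its radius cubed, the implied G in this sketch depends only on the object's mean density, not on its size, so the law can only agree with the measured G for objects of one particular density.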

Since I regularly oppose censorship based on fact-ignoring consensus and other types of elitist fascism in general (fascism being best defined as the primitive doctrine that 'might is right' and that whoever speaks loudest or has the biggest gun is scientifically correct), it is only right that I write this blog post to clarify the details that really are interesting.

Maybe McCutcheon could make his case better to scientists by putting the derivation and calculation of G on the front cover of his book, instead of a sunset. Possibly he could justify his guesswork idea to crackpot string theorists by some relativistic obfuscation invoking Einstein, such as:

'According to relativity, it's just as reasonable to think of the Earth as zooming upwards to hit you when you jump off a cliff, as to think that you are falling downward.'

If he really wants to go down the road of mainstream hype and obfuscation, he could maybe do even better by invoking the popular misrepresentation of Copernicus:

'According to Copernicus, the observer is at "no special place in the universe", so it is as justifiable to consider the Earth's surface accelerating upwards to meet you, as vice-versa. Copernicus travelled throughout the entire universe on a spaceship or a flying carpet to confirm the crackpot modern claim that we are not at a special place in the universe, you know.'

The string theorists would love that kind of thing (i.e., assertions that there is no preferred reference frame, based on lies) seeing that they think spacetime is 10 or 11 dimensional, based on lies.

My calculation of G is entirely different, being due to a causal mechanism of graviton radiation, and it has detailed empirical (non-speculative) foundations to it, and a derivation which predicts G in terms of the Hubble parameter and the local density:

$G = (3/4)H^2/(\rho \pi e^3)$,

plus a lot of other things about cosmology, including a 1996 prediction of the expansion rate of the universe at long distances (two years before it was confirmed by Saul Perlmutter's observations in 1998). However, this is not necessarily incompatible with McCutcheon's theory. There are such things as mathematical dualities: where completely different calculations are really just different ways of modelling the same thing.
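To show how the formula for G in terms of the Hubble parameter and density can be used numerically, here is a minimal sketch. It takes the density symbol as ρ and e as the base of natural logarithms, which are assumptions about the formula's notation. The Hubble parameter of 70 km/s/Mpc is also an assumed input, not a value given in this post, and the sketch simply solves for the density that would reproduce the measured G.

```python
import math

MPC_IN_M = 3.0857e22    # metres in one megaparsec
G_MEASURED = 6.673e-11  # m^3/(kg s^2)


def G_from_hubble(H, rho):
    """G = (3/4) H^2 / (rho * pi * e^3), with H in 1/s and rho in kg/m^3."""
    return 0.75 * H**2 / (rho * math.pi * math.e**3)


def density_for_G(H, G):
    """Invert the same formula for the density rho."""
    return 0.75 * H**2 / (G * math.pi * math.e**3)


H = 70e3 / MPC_IN_M     # ASSUMED Hubble parameter, converted to 1/s
rho = density_for_G(H, G_MEASURED)

print(f"H = {H:.3e} 1/s")
print(f"density needed to give the measured G: {rho:.3e} kg/m^3")
print(f"round trip: G = {G_from_hubble(H, rho):.3e} m^3/(kg s^2)")
```

Since G scales as the square of H divided by the density, the result is sensitive to both inputs; the sketch says nothing about which values are appropriate.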

McCutcheon's book is not just the interesting sort of calculation above, sadly. It also contains a large amount of drivel (particularly in the first chapter) about his alleged flaw in the equation: W = Fd or work energy = force applied * distance moved by force in the direction that the force operates. McCutcheon claims that there is a problem with this formula, and that work energy is being used continuously by gravity, violating conservation of energy. On page 14 (2004 edition) he claims falsely: 'Despite the ongoing energy expended by Earth's gravity to hold objects down and the moon in orbit, this energy never diminishes in strength...'

The error McCutcheon is making here is that no energy is used up unless gravity is making an object move. So the gravity field is not depleted of a single Joule of energy when an object is simply held in one place by gravity. For orbits, the gravity force acts at right angles to the direction in which the moon is travelling in its orbit, so gravity is not using up energy in doing work on the moon. If the moon were falling straight down to earth, then yes, the gravitational field would be losing energy to the kinetic energy that the moon would gain as it accelerated. But it isn't falling: the moon is not moving towards us along the lines of gravitational force; instead it is moving at right angles to those lines of force. McCutcheon does eventually get to this explanation on page 21 of his book (2004 edition). But this just leads him to write several more pages of drivel about the subject: by drivel, I mean philosophy.

On a positive note, McCutcheon near the end of the book (pages 297-300 of the 2004 edition) correctly points out that where two waves of equal amplitude and frequency are superimposed (i.e., travel through one another) exactly out of phase, their waveforms cancel out completely due to 'destructive interference'. He makes the point that there is an issue for conservation of energy where such destructive interference occurs. For example, Young claimed that destructive interference of light occurs at the dark fringes on the screen in the double-slit experiment. Is it true that two out-of-phase photons really do arrive at the dark fringes, cancelling one another out? Clearly, this would violate conservation of energy!

Back in February 1997, when I was editor of Science World magazine (ISSN 1367-6172), I published an article by the late David A. Chalmers on this subject. Chalmers summed the Feynman path integral for the two slits and found that if Young's explanation was correct, then half of the total energy would be unaccounted for in the dark fringes. The photons are not arriving at the dark fringes. Instead, they arrive in the bright fringes.
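Returning to the earlier point about W = Fd and orbits: the sketch below sums force times displacement along two paths in an inverse-square attractive field. It uses arbitrary units and an idealised circular orbit, both of which are simplifying assumptions; the function names are invented for this illustration.

```python
import math


def work_along_path(force_at, points):
    """Sum F . dr over straight segments, with F taken at each segment midpoint."""
    total = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        mx, my = (x0 + x1) / 2.0, (y0 + y1) / 2.0
        fx, fy = force_at(mx, my)
        total += fx * (x1 - x0) + fy * (y1 - y0)
    return total


def central_force(x, y):
    """Inverse-square attraction toward the origin (unit strength, arbitrary units)."""
    r = math.hypot(x, y)
    return (-x / r**3, -y / r**3)


n = 1000
# One full circular orbit of radius 1: motion is at right angles to the force.
circle = [(math.cos(2 * math.pi * k / n), math.sin(2 * math.pi * k / n))
          for k in range(n + 1)]
# Straight fall from r = 1 to r = 0.5: motion is along the force.
radial_fall = [(1.0 - 0.5 * k / n, 0.0) for k in range(n + 1)]

w_orbit = work_along_path(central_force, circle)
w_fall = work_along_path(central_force, radial_fall)

print(f"work done by gravity over one circular orbit: {w_orbit:.2e}")
print(f"work done by gravity during the radial fall:  {w_fall:.4f}")
```

The orbit total vanishes to within rounding error, while the radial fall gives a positive amount of work, which is the distinction drawn in the text.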

The interference of radio waves and other phased waves is also known as the Hanbury-Brown-Twiss effect, whereby if you have two radio transmitter antennae, the signal that can be received depends on the distance between them: moving them slightly apart or together changes the relative phase of the transmitted signal from one with respect to the other, cancelling the signal out or reinforcing it. (It depends on the frequencies and amplitudes as well: if both transmitters are on the same frequency and have the same output amplitude and radiation power, then perfectly destructive interference will occur if they are exactly out of phase, and perfect reinforcement - constructive interference - will occur if they are exactly in phase.) This effect also actually occurs in electricity, replacing Maxwell's mechanical 'displacement current' of vacuum dielectric charges.
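The dependence of the received signal on relative phase can be sketched with simple phasor addition. This is an idealised illustration only: it assumes two transmitters on exactly the same frequency, a receiver far away along the line through both, and a wavelength of 3 m picked arbitrarily.

```python
import math


def combined_amplitude(a1, a2, phase_difference):
    """Peak amplitude of the sum of two same-frequency sinusoids (phasor addition)."""
    real = a1 + a2 * math.cos(phase_difference)
    imag = a2 * math.sin(phase_difference)
    return math.hypot(real, imag)


def phase_from_path_difference(path_difference, wavelength):
    """Relative phase in radians produced by a difference in path length."""
    return 2.0 * math.pi * path_difference / wavelength


wavelength = 3.0  # metres, ASSUMED for illustration

for separation in (0.0, 0.75, 1.5):
    phi = phase_from_path_difference(separation, wavelength)
    amp = combined_amplitude(1.0, 1.0, phi)
    print(f"path difference {separation:4.2f} m -> combined amplitude {amp:.3f}")

# Unequal amplitudes never cancel completely, even exactly out of phase.
partial = combined_amplitude(1.0, 0.5, math.pi)
print(f"amplitudes 1.0 and 0.5, exactly out of phase -> {partial:.3f}")
```

Equal amplitudes give anything from full reinforcement to full cancellation as the path difference goes from zero to half a wavelength, while unequal amplitudes leave a residual signal.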

Feynman quotation

The Feynman quotation I located is this:

'When we look at photons on a large scale - much larger than the distance required for one stopwatch turn - the phenomena that we see are very well approximated by rules such as 'light travels in straight lines' because there are enough paths around the path of minimum time to reinforce each other, and enough other paths to cancel each other out. But when the space through which a photon moves becomes too small (such as the tiny holes in the screen), these rules fail - we discover that light doesn't have to go in straight lines, there are interferences created by two holes, and so on. The same situation exists with electrons: when seen on a large scale, they travel like particles, on definite paths. But on a small scale, such as inside an atom, the space is so small that there is no main path, no 'orbit'; there are all sorts of ways the electron could go [influenced by the randomly occurring fermion pair-production in the strong electric field on small distance scales, according to quantum field theory], each with an amplitude. The phenomenon of interference becomes very important, and we have to sum the arrows to predict where an electron is likely to go.'

- R. P. Feynman, QED, Penguin, London, 1990, pp. 84-5. (Emphasis added in bold.)

Compare that to:

‘… the ‘inexorable laws of physics’ … were never really there … Newton could not predict the behaviour of three balls … In retrospect we can see that the determinism of pre-quantum physics kept itself from ideological bankruptcy only by keeping the three balls of the pawnbroker apart.’ – Dr Tim Poston and Dr Ian Stewart, ‘Rubber Sheet Physics’ (science article, not science fiction!) in Analog: Science Fiction/Science Fact, Vol. C1, No. 129, Davis Publications, New York, November 1981.

‘… the Heisenberg formulae can be most naturally interpreted as statistical scatter relations [between virtual particles in the quantum foam vacuum and real electrons, etc.], as I proposed [in the 1934 book The Logic of Scientific Discovery]. … There is, therefore, no reason whatever to accept either Heisenberg’s or Bohr’s subjectivist interpretation …’ – Sir Karl R. Popper, Objective Knowledge, Oxford University Press, 1979, p. 303.

Heisenberg quantum mechanics: Poincare chaos applies on the small scale, since the virtual particles of the Dirac sea in the vacuum regularly interact with the electron and upset the orbit all the time, giving wobbly chaotic orbits which are statistically described by the Schroedinger equation - it’s causal, there is no metaphysics involved. The main error is the false propaganda that ‘classical’ physics models contain no inherent uncertainty (dice throwing, probability): chaos emerges even classically from the 3+ body problem, as first shown by Poincare.

Anti-causal hype for quantum entanglement: Dr Thomas S. Love of California State University has shown that entangled wavefunction collapse (and related assumptions such as superimposed spin states) is a mathematical fabrication, introduced as a result of the discontinuity at the instant of switch-over between the time-dependent and time-independent versions of the Schroedinger equation at the time of measurement.

Just as the Copenhagen Interpretation was supported by lies (such as von Neumann's false 'disproof' of hidden variables in 1932) and fascism (such as the way Bohm was treated by the mainstream when he disproved von Neumann's 'proof' in the 1950s), string 'theory' (it isn't a theory) is supported by similar tactics which are political in nature and have nothing to do with science:

‘String theory has the remarkable property of predicting gravity.’ - Dr Edward Witten, M-theory originator, Physics Today, April 1996. ‘The critics feel passionately that they are right, and that their viewpoints have been unfairly neglected by the establishment. … They bring into the public arena technical claims that few can properly evaluate. … Responding to this kind of criticism can be very difficult. It is hard to answer unfair charges of élitism without sounding élitist to non-experts. A direct response may just add fuel to controversies.’ - Dr Edward Witten, M-theory originator, Nature, Vol 444, 16 November 2006.

*****************************

‘Superstring/M-theory is the language in which God wrote the world.’ - Assistant Professor Lubos Motl, Harvard University, string theorist and friend of Edward Witten, quoted by Professor Bert Schroer, http://arxiv.org/abs/physics/0603112 (p. 21).

‘The mathematician Leonhard Euler … gravely declared: “Monsieur, (a + bn)/n = x, therefore God exists!” … peals of laughter erupted around the room …’ - http://anecdotage.com/index.php?aid=14079